Five Mistakes I Made Vibe Building with AI (And What I'd Do Differently)

Lessons Learned from Building with AI as a Non-Engineer

As someone with decades of product management experience, I've spent my career understanding customer problems, translating business needs, and documenting technical requirements. I've written PRDs, BRDs, and user stories. I've refined the continuous delivery processes for my team. So when I decided to dive into AI development to deepen my technical understanding, I figured my PM skills would transfer seamlessly.

I was (somewhat!) wrong.

Many of my core PM skills did transfer: being clear about the problems I'm solving, understanding user pain points, and communicating strategically all proved essential. But after several humbling experiences with my first AI-powered applications, I discovered that working with AI requires adapting these familiar skills in unexpected ways. The principles are the same, but the execution demands more precision and different safeguards than I expected.

And after trying several different tools, I’ve found that most, if not all, run into the same challenges.

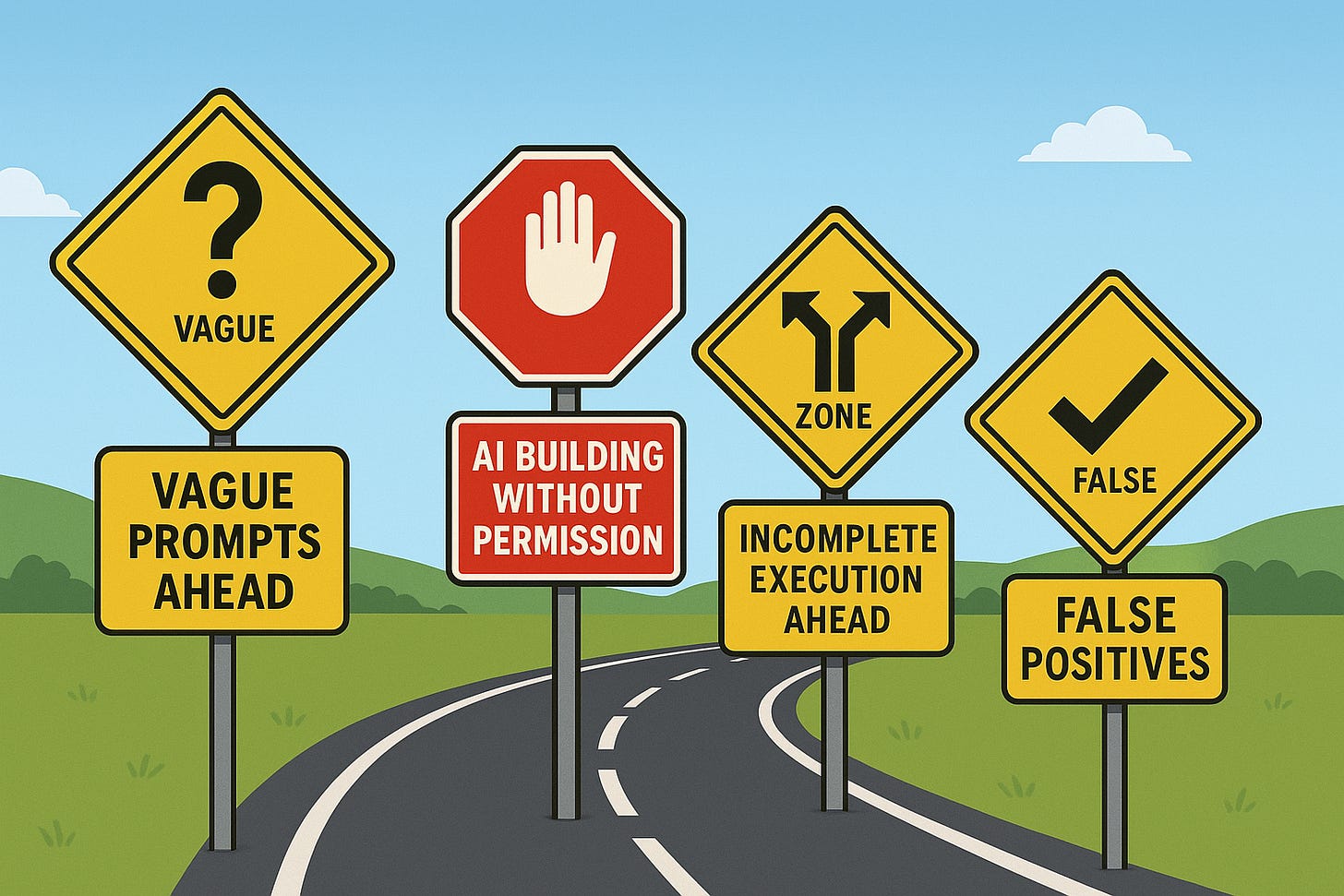

Here are the five biggest mistakes I made, and what I wish I'd known from the start.

Mistake #1: Treating AI Like a Mind Reader

What happened: In my early projects, I'd give prompts like "build a login page with OTP" or "create a calendar feature." Sometimes I'd get lucky with something surprisingly good. More often, I'd get something unusable that missed the mark entirely.

The lesson: AI tools lack the context that human developers naturally bring to product development. They can't read between the lines or make reasonable assumptions based on industry best practices.

What I do now: I structure my prompts like detailed PRDs. I include specific requirements, expected outputs, user personas, and examples of what "good" looks like. Instead of "build a calendar feature," I now write: "Create a calendar view with toggles for day, week, month, and year. The view should adjust to the date, week, or month selected. Show appointments from the database records for the selected period. Here's an example screenshot of the expected output..."
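To make the PRD-style structure concrete, here is a minimal Python sketch that assembles a prompt from named sections (goal, persona, requirements, expected output, example). The `PromptSpec` class and `build_prompt` function are hypothetical helpers for illustration, not part of any AI tool's API. The idea is simply that each element listed above gets its own explicit slot, so a missing piece is easy to spot before you send the prompt.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the PRD-style parts of a build prompt."""
    goal: str
    requirements: list[str] = field(default_factory=list)
    expected_output: str = ""
    persona: str = ""
    example: str = ""  # a description of, or reference to, what "good" looks like


def build_prompt(spec: PromptSpec) -> str:
    """Render the spec as labeled sections, skipping any that are empty."""
    sections = [f"Goal: {spec.goal}"]
    if spec.persona:
        sections.append(f"User persona: {spec.persona}")
    if spec.requirements:
        bullets = "\n".join(f"- {req}" for req in spec.requirements)
        sections.append(f"Requirements:\n{bullets}")
    if spec.expected_output:
        sections.append(f"Expected output: {spec.expected_output}")
    if spec.example:
        sections.append(f"Example of good output: {spec.example}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    spec = PromptSpec(
        goal="Create a calendar view",
        requirements=[
            "Toggles for day, week, month, and year",
            "The view adjusts to the date, week, or month selected",
            "Show appointments from the database records for the selected period",
        ],
        expected_output="A calendar page matching the attached screenshot",
    )
    print(build_prompt(spec))
```

Empty sections are dropped rather than printed blank, so the rendered prompt only contains what you actually specified.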

Mistake #2: Letting AI Run Wild During Brainstorming

What happened: I'd start a conversation with AI to explore an idea ("What if we added an AI agent to read input from an image?"), and suddenly it would start writing code. By the time I realized what was happening, I had half-built features I never actually wanted.

The lesson: AI tools are eager to build, even when you're still in discovery mode. Unlike human developers, who naturally pause to ask clarifying questions, AI will start executing on incomplete ideas.

What I do now: I explicitly separate ideation from execution. I use clear mode indicators like "BRAINSTORMING MODE: Don't write any code yet, just help me think through options" or "BUILD MODE: Now implement the solution we just discussed." This simple framing prevents AI from jumping ahead.

Mistake #3: The "Fix Everything" Trap

What happened: I'd ask AI to update one specific feature, maybe changing a button color or adding a new field, and it would "helpfully" refactor other parts of my working code. Suddenly, functionality that had been working perfectly would break, forcing me to retest everything.

The lesson: AI tends to optimize broadly when given any opening. It's like asking a contractor to fix a doorknob and coming home to find they've renovated the entire kitchen.

What I do now: I scope my requests as I would with human developers. I'm explicit about what should and shouldn't change: "Update only the button label and styling to use the primary color. Do not modify any other CSS, JavaScript functionality, or HTML structure. Leave all other buttons unchanged. Do NOT modify these working components…"

Mistake #4: Assuming AI Follows Through on Complex Tasks

What happened: During a recent refactoring project, I gave AI detailed instructions to update all code and endpoints to use the new modules. Despite clear, comprehensive instructions, it completed only about 70% of the changes, leaving broken connections throughout the application.

The lesson: AI struggles to execute complex, multi-step tasks reliably. It can lose track of requirements or fail to follow through completely, even when it seems to understand the full scope.

What I do now: I break large tasks into smaller, verifiable chunks with clear checkpoints. Instead of "refactor the entire authentication system," I'll request "Step 1: Take backups of all relevant files and update the login component to use the new auth module. Show me the changes before proceeding to Step 2." This way, I catch issues early.
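One way to make a checkpoint verifiable, rather than taking the AI's word that a step is done, is a quick scan for leftovers after each step. The Python sketch below assumes the old module is referenced by a plain identifier in your source files and lists every line that still mentions it. `find_leftover_references`, the `src` directory, and the `old_auth` name are hypothetical placeholders for your own project.

```python
import re
from pathlib import Path


def find_leftover_references(root, old_name, suffixes=(".py", ".js", ".ts")):
    """Return (file, line number, line text) for each line still mentioning old_name.

    Matches whole identifiers only, so "old_auth" will not match "old_auth_helper".
    """
    pattern = re.compile(rf"\b{re.escape(old_name)}\b")
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            for lineno, line in enumerate(text.splitlines(), start=1):
                if pattern.search(line):
                    hits.append((str(path), lineno, line.strip()))
    return hits


if __name__ == "__main__":
    # Hypothetical project directory and old module name; substitute your own.
    leftovers = find_leftover_references("src", "old_auth")
    for file, lineno, line in leftovers:
        print(f"{file}:{lineno}: {line}")
    print(f"{len(leftovers)} leftover reference(s)")
```

An empty result doesn't prove the refactor works, but a non-empty one is an immediate sign that the step isn't finished.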

Mistake #5: Trusting AI to Test Its Own Work

What happened: To speed up development, I asked the AI to create test cases and validate functionality along the way. It repeatedly reported false positives, claiming tests passed when the actual functionality was broken or incomplete.

The lesson: AI tools have significant blind spots when evaluating their own output and tend to be overconfident about success. It's like asking someone to grade their own exam.

What I do now: I use AI to generate test cases, but I never trust it to run them or validate the results. It helps me identify what to test and write test scripts, but I manually verify every outcome. AI-generated tests are a good starting point, not a final check.
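To illustrate what a false positive can look like, here is a small, hypothetical Python example: a discount function, a buggy version of it, a "vacuous" test that only checks the call returns a number, and a test that pins concrete expected values. All names are invented for illustration. The vacuous test passes for both versions; only the value test catches the bug, and that kind of concrete check is what's worth verifying by hand.

```python
def apply_discount(price: float, percent: float) -> float:
    """Intended behavior: return the price reduced by `percent` percent."""
    return round(price * (1 - percent / 100), 2)


def apply_discount_buggy(price: float, percent: float) -> float:
    """Same signature, but returns the discount amount instead (a bug)."""
    return round(price * percent / 100, 2)


def vacuous_test(fn) -> bool:
    """Only checks that the call returns a float; never checks the value."""
    return isinstance(fn(100.0, 20), float)


def value_test(fn) -> bool:
    """Checks concrete expected outputs, including edge cases."""
    return (
        fn(100.0, 20) == 80.0
        and fn(19.99, 0) == 19.99
        and fn(50.0, 100) == 0.0
    )


if __name__ == "__main__":
    for fn in (apply_discount, apply_discount_buggy):
        print(fn.__name__, "vacuous:", vacuous_test(fn), "value:", value_test(fn))
```

Running it prints `apply_discount vacuous: True value: True` followed by `apply_discount_buggy vacuous: True value: False`.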

The Meta-Lesson: AI Development is Still Product Development

The biggest realization from these mistakes is that building with AI requires the same iterative, user-focused mindset as traditional product development, applied to your relationship with the AI itself.

Just as we write user stories to communicate with developers, we need clear, specific prompts for AI. Just as we break features into sprints, we need to break AI tasks into verifiable steps. And just as we wouldn’t ship without testing, we can’t trust AI output without manual validation.

The difference is that AI is both more powerful and more literal than human developers. It can build sophisticated functionality from a well-crafted prompt, but it won't catch obvious gaps a junior developer would flag. While AI excels at prototypes and proofs of concept, it often falls short of production-ready code, missing the error handling, security, and scalability considerations that experienced developers would naturally include.

Learning to work with AI has made me a sharper product manager. It’s pushed me to be more precise in my requirements, more systematic in testing, and more deliberate in breaking down complex problems. These skills flow back into how I work with human teams.

The learning curve is steep, and choosing the right tool takes a small upfront investment. But if you're a PM considering diving in, my advice is simple: start small, be specific, and verify everything. Your product instincts will serve you well, but you'll need to adapt them to a new kind of teammate: one that's capable but requires explicit guidance.